Reinforcement Learning with Human Feedback

One of the key improvements in models like ChatGPT and GPT-4 relative to their predecessors has been their ability to follow instructions. This capability has its roots in a technique known as reinforcement learning with human feedback (RLHF), outlined in a 2017 paper. The core idea of RLHF is to extend an LLM’s core ability to predict the next word with the ability to understand and fulfill human requests. This is done by reformulating language tasks as a reinforcement learning problem.

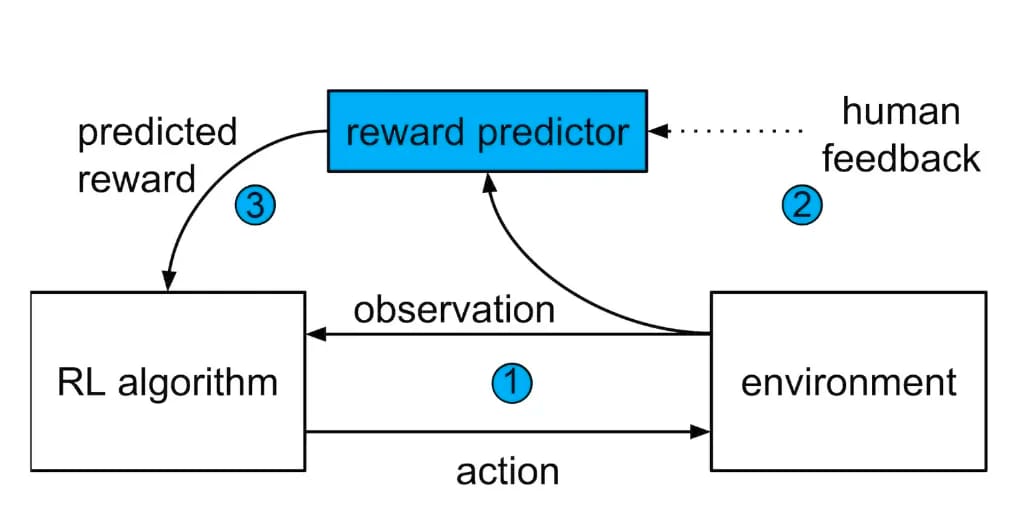

In a traditional LLM, a given prompt can have a practically unlimited number of valid answers, and traditional unsupervised learning techniques are not very good at discriminating among them. By formulating the problem in the reinforcement learning domain, we can train an agent to learn a policy that favors the outputs best aligned with the human prompt. Conceptually, RLHF can be modeled in three steps:

I. Pretraining: Training reinforcement learning models can be incredibly taxing in terms of time and computational resources. To address this challenge, RLHF starts with a pretrained model that is already able to produce coherent outputs.

II. Reward Model: The second phase of RLHF focuses on creating a reward model that takes a sequence of text as input and returns a numerical score representing the alignment of that input with a given human preference.

III. Reinforcement Learning Loop: This phase assembles the complete reinforcement learning loop, in which the LLM becomes the agent: it takes prompts from the training dataset and produces an output for each one. Each output is passed to the reward model, which scores its alignment with human preferences. The LLM is then updated to reflect that preference score.
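The three steps above can be sketched with a toy example. This is only an illustration under strong assumptions, not how a real LLM is trained: the "policy" is a softmax over three canned responses (hypothetical), the "reward model" is a hand-written lookup table with made-up scores, and the update rule is plain REINFORCE rather than the PPO algorithm used in practice.

```python
import math
import random

# Hypothetical candidate responses to one prompt, standing in for LLM outputs.
RESPONSES = ["ignores the request", "partially answers", "answers the request"]

def reward_model(response: str) -> float:
    """Toy stand-in for a learned reward model (the scores are made up)."""
    scores = {
        "ignores the request": -1.0,
        "partially answers": 0.2,
        "answers the request": 1.0,
    }
    return scores[response]

def softmax(logits):
    """Turn raw logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    # Step I: start from an existing policy (uniform here, for simplicity).
    logits = [0.0] * len(RESPONSES)
    for _ in range(steps):
        probs = softmax(logits)
        # The agent acts: sample a response from the current policy.
        i = rng.choices(range(len(RESPONSES)), weights=probs)[0]
        # Step II: the reward model scores the sampled response.
        r = reward_model(RESPONSES[i])
        # Step III: REINFORCE update, using
        # d log pi(i) / d logit_j = 1[i == j] - probs[j].
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * r * grad
    return softmax(logits)

final_probs = train()
for response, p in zip(RESPONSES, final_probs):
    print(f"{p:.3f}  {response}")
```

Over many updates the policy should shift probability toward the response the reward model scores highest, which is the behavior the loop is designed to produce.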

Even though the original RLHF research dates back to 2017, it is only recently that this technique has become a cornerstone of the new generation of LLMs. RLHF methods are now one of the most active areas of research in the LLM space.

🔎 ML Research You Should Know About: The RLHF Paper

In “Deep reinforcement learning from human preferences”, researchers from OpenAI and DeepMind introduced the original RLHF technique.

Why is it so important? RLHF has become a key element of models such as ChatGPT and GPT-4.

Summary: RLHF uses small amounts of feedback from a human evaluator to guide the agent’s understanding of the goal and its corresponding reward function. The training process is a three-step feedback cycle. The AI agent starts by acting randomly in the environment. Periodically, the agent presents two video clips of its behavior to the human evaluator, who then decides which clip comes closest to fulfilling the goal (in the paper’s best-known example, performing a backflip). The agent then uses this feedback to gradually build a model of the goal and the reward function that best explains the human’s judgments. Once the agent has a clear understanding of the goal and the corresponding reward function, it uses RL to learn how to achieve that goal. As its behavior improves, it continues to ask for human feedback on the trajectory pairs where it is most uncertain about which is better, further refining its understanding of the goal.
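The step in which the agent builds a reward function "that best explains the human’s judgments" can be made concrete. In the paper, the probability that the human prefers one clip over the other is modeled as a softmax over the summed predicted rewards of each clip, and the reward predictor is trained with a cross-entropy loss against the human’s choice. The sketch below assumes the per-step predicted rewards are already available as plain lists of floats; the function names and the sample numbers are illustrative, not taken from the paper’s code.

```python
import math

def preference_probability(rewards_a, rewards_b):
    """Modeled probability that clip A is preferred over clip B.

    Each argument is a list of predicted per-step rewards for one clip.
    Algebraically equal to exp(sum_a) / (exp(sum_a) + exp(sum_b)).
    """
    sum_a, sum_b = sum(rewards_a), sum(rewards_b)
    return 1.0 / (1.0 + math.exp(sum_b - sum_a))

def preference_loss(rewards_a, rewards_b, human_prefers_a: bool):
    """Cross-entropy between the modeled preference and the human's choice."""
    p_a = preference_probability(rewards_a, rewards_b)
    return -math.log(p_a if human_prefers_a else 1.0 - p_a)

# Made-up predicted rewards for two short clips.
clip_a = [0.1, 0.4, 0.9]
clip_b = [0.0, 0.1, 0.2]

p = preference_probability(clip_a, clip_b)
loss_if_agree = preference_loss(clip_a, clip_b, human_prefers_a=True)
loss_if_disagree = preference_loss(clip_a, clip_b, human_prefers_a=False)
print(p, loss_if_agree, loss_if_disagree)
```

When the human’s choice matches the clip with the higher predicted reward, the loss is small; when it contradicts the prediction, the loss is larger, which pushes the reward predictor to revise its estimates.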

The original idea of RLHF had very little to do with LLMs and was originally tested in environments such as Atari video games. The tests showed that agents trained with RLHF value different capabilities than those trained without it. It wasn’t long before researchers started adapting RLHF to the LLM domain. In general, adapting RLHF to LLMs can be summarized in three key steps:

1. Pretraining a language model (LM).

2. Training the reward model.

3. Fine-tuning the LLM with reinforcement learning.

In the specific case of ChatGPT, OpenAI began by collecting a dataset of human-written demonstrations on prompts submitted to its API, which was used to train supervised learning baselines. Next, it gathered a dataset of human-labeled comparisons between two model outputs on a larger set of API prompts. A reward model (RM) was trained on this dataset to predict which output the labelers would prefer. Finally, the RM was used as a reward function, and the GPT-3 policy was fine-tuned to maximize this reward using the proximal policy optimization (PPO) algorithm. Human AI trainers also provided conversations in which they played both sides: the user and the AI assistant. The trainers had access to suggestions produced by the model to help them compose their responses.
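The text above does not spell out what PPO optimizes, so here is a minimal sketch of its clipped surrogate objective for a single action (for an LLM, a single token). It assumes the log-probabilities under the new and old policies and an advantage estimate are already computed; the function name and the clipping value of 0.2 are illustrative choices, not details confirmed for ChatGPT.

```python
import math

def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO clipped surrogate objective for one action (to be maximized).

    ratio = pi_new(a) / pi_old(a). Clipping removes the incentive to move
    the ratio outside [1 - clip_eps, 1 + clip_eps] in a single update.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return min(ratio * advantage, clipped_ratio * advantage)

# Unchanged policy: the objective is just the advantage.
same_policy = ppo_clipped_objective(-1.0, -1.0, advantage=1.0)

# The new policy doubles the action's probability; the gain is capped.
big_increase = ppo_clipped_objective(math.log(0.4), math.log(0.2), advantage=1.0)

# Negative advantage and a halved probability: the objective is also capped.
big_decrease = ppo_clipped_objective(math.log(0.1), math.log(0.2), advantage=-1.0)

print(same_policy, big_increase, big_decrease)
```

The cap is what keeps each fine-tuning step conservative: once the policy has moved far enough from the one that generated the data, further movement in the same direction earns no additional objective.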

The ideas behind RLHF pioneered in OpenAI’s paper have become one of the foundational elements of the new generation of LLMs.

🤖 ML Technology to Follow: Transformer Reinforcement Learning is One of the Top Libraries for RLHF

Why should I know about this: Transformer Reinforcement Learning trains Hugging Face’s transformers using reinforcement learning with PPO.

What is it: Transformer Reinforcement Learning (TRL) can be used to train transformer language models with Proximal Policy Optimization (PPO). The library is built on top of Hugging Face’s transformers library, making it possible to load pre-trained language models directly through transformers. Currently, TRL supports most decoder and encoder-decoder architectures.

Notable features of TRL include:

PPOTrainer: A PPO trainer designed for language models that only requires (query, response, reward) triplets to optimize the language model.

AutoModelForCausalLMWithValueHead & AutoModelForSeq2SeqLMWithValueHead: A transformer model with an additional scalar output for each token that can be utilized as a value function in reinforcement learning.

The process of fine-tuning a language model via PPO can be broken down into three main steps:

Rollout: The language model generates a response or continuation based on a query, which could potentially be the start of a sentence.

Evaluation: The query and response are evaluated through a function, model, human feedback, or some combination thereof. The crucial aspect is to yield a scalar value for each query/response pair.

Optimization: This is the most intricate step. During the optimization phase, the query/response pairs are employed to calculate the log-probabilities of the tokens in the sequences. This is achieved through the use of both the trained model and a reference model (usually the pre-trained model before fine-tuning). The KL-divergence between the two outputs serves as an additional reward signal to ensure that the generated responses do not deviate too far from the reference language model. Finally, the active language model is trained with PPO.
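The way the KL term enters the reward in the optimization step can be sketched as follows. This is a simplified illustration, not TRL’s actual code: it assumes per-token log-probabilities from the active and reference models are already available as lists of floats, uses the difference of log-probabilities as a per-token KL estimate, and adds the scalar score from the evaluation step to the last token. The function name and the coefficient value are assumptions.

```python
def shaped_rewards(logprobs, ref_logprobs, score, kl_coef=0.2):
    """Per-token rewards: a KL penalty on every token, plus the scalar
    evaluation score added on the final response token."""
    if not logprobs or len(logprobs) != len(ref_logprobs):
        raise ValueError("need two non-empty sequences of equal length")
    # Penalize tokens the active model makes more likely than the reference.
    rewards = [
        -kl_coef * (lp - ref_lp)
        for lp, ref_lp in zip(logprobs, ref_logprobs)
    ]
    rewards[-1] += score
    return rewards

# Active model identical to the reference: no penalty, only the score.
no_drift = shaped_rewards([-1.0, -0.5, -2.0], [-1.0, -0.5, -2.0], score=1.0)

# Active model assigns higher probability than the reference: penalized.
drift = shaped_rewards([-1.0, -0.5], [-1.5, -1.0], score=1.0)

print(no_drift, drift)
```

With this shaping, a response that earns a high score but drifts far from the reference model’s distribution ends up with a lower total reward than one that earns the same score while staying close to it.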

Using TRL is relatively simple. The following example queries an LLM to produce an output, which is then assigned a reward that could come from either a human or an AI agent.

```python
import torch
from transformers import AutoTokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead, create_reference_model
from trl.core import respond_to_batch

# load the model to be trained and a frozen reference copy of it
model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
model_ref = create_reference_model(model)

tokenizer = AutoTokenizer.from_pretrained('gpt2')

# initialize the PPO configuration
ppo_config = PPOConfig(batch_size=1,)

# encode a query
query_txt = "This morning I went to the "
query_tensor = tokenizer.encode(query_txt, return_tensors="pt")

# get model response (the rollout is sampled from the model being trained)
response_tensor = respond_to_batch(model, query_tensor)

# create a ppo trainer
ppo_trainer = PPOTrainer(ppo_config, model, model_ref, tokenizer)

# define a reward for response
# (this could be any reward such as human feedback or output from another model)
reward = [torch.tensor(1.0)]

# train model for one step with ppo
train_stats = ppo_trainer.step([query_tensor[0]], [response_tensor[0]], reward)
```

How can I use it: TRL is open source and available at https://github.com/lvwerra/trl .